Concept Extraction, Definition, and Visualization from Large RDF

In our last post, Nick talked about how n-dimensional (for small n) real-valued representations of relational data can be constructed in a semantically rich way. These fixed-length representations can then be fed into more traditional machine learning algorithms, which often require input in this form. One example Nick explored in his post was using these representations to perform inference on the relational data: given two pieces of a triple <s p o>, can the model guess the third? In this post, we are going to talk about how these fixed-dimensional representations can be used to extract and define semantically rich concepts from the underlying data.

Before we begin, I should explain what I mean by concept extraction and definition. Given a collection X of entities (people, locations, etc.), a concept is a subset Y of X whose entities are related by some collection F of semantic features. Here, I will call the discovery of the subset Y concept extraction, and the discovery of the collection F concept definition.

Frequently, when looking for concepts in data, one defines a concept before extracting it. For example, when searching Google Images for “fluffy cat”, I have defined a set of features F = {fluffy, cat}, and the response Google gives (a collection of fluffy cat images) is the concept Y extracted for the feature set F. Alternatively, I can start by building subsets Y that can reasonably be assumed to be semantically related, and derive the collection F from what I know about the elements of Y. An excellent example of this is a recommendation engine like the one at Netflix. Netflix has a collection Y of movies that they know I have watched and enjoyed and, based on that set, they build up a collection of features F that characterizes my tastes as a moviegoer. The set F is then used to recommend movies I would be interested in.

In the work we have done at GA-CCRi, we have implemented both of these strategies and combined them to add richness to our extracted concepts.

Concept Definition to Concept Extraction:

For relational data, there is a wealth of ways to define concepts. For example, one could define a concept as the set of entities connected to the entity Obama, or as the set of entities that are female (i.e., entities that complete the triple <? hasGender Female>). Depending on the dataset and the kinds of concepts one wishes to discover, there is a wide array of restrictions one can place on the concept space; for our initial work in this area, we decided to focus on two specific kinds of concepts:

- collections of entities that, for a fixed predicate p and object o, complete the triple <? p o> (such as our <? hasGender Female> example above).

- collections of entities that, for a fixed subject s and predicate p, complete the triple <s p ?> (such as the collection of entities Obama knows: <Obama knows ?>).
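As a minimal sketch of how both kinds of concepts can be built with a single pass over the data, assume the corpus fits in memory as a list of (subject, predicate, object) string tuples. The function name `extract_concepts`, the use of `None` to stand in for the "?" slot, and the `min_size` cutoff for discarding infrequent concepts are illustrative choices, not GA-CCRi's actual implementation.

```python
from collections import defaultdict

def extract_concepts(triples, min_size=2):
    """Build both concept types from (s, p, o) triples.

    Keys use None for the open slot:
      (None, p, o) -> subjects completing <? p o>
      (s, p, None) -> objects completing <s p ?>
    Concepts with fewer than min_size members are discarded.
    """
    concepts = defaultdict(set)
    for s, p, o in triples:
        concepts[(None, p, o)].add(s)
        concepts[(s, p, None)].add(o)
    return {key: members for key, members in concepts.items()
            if len(members) >= min_size}


# Toy triples, made up for illustration.
triples = [
    ("Obama", "knows", "Biden"),
    ("Obama", "knows", "Clinton"),
    ("Michelle", "hasGender", "Female"),
    ("Hillary", "hasGender", "Female"),
    ("Michelle", "marriedTo", "Obama"),
]
# Only <Obama knows ?> and <? hasGender Female> survive the size cutoff;
# <? marriedTo Obama> has a single member and is dropped.
concepts = extract_concepts(triples)
```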

Concept extraction based on the data in the corpus is then just a simple lookup. After throwing away all concepts considered too infrequent (e.g. the concept defined by <? marriedTo Obama> has a single entity in it, so it isn’t significant enough to be of value), we end up with a manageable set of concepts that the user can explore. To add a further layer of richness to these concepts, GA-CCRi has developed two key augmentations intended to aid in information discovery:

- Inference: using Nick’s inference strategy from our last post, we can replace the question “which entities are part of concept C?” with the question “which entities can we infer are part of concept C?” For instance, we can infer which entities Obama knows by finding vectors near the vector Obama + knows in our vector space. This lets us not only look up the known members of a concept, but also discover entities that belong to it with some level of confidence.

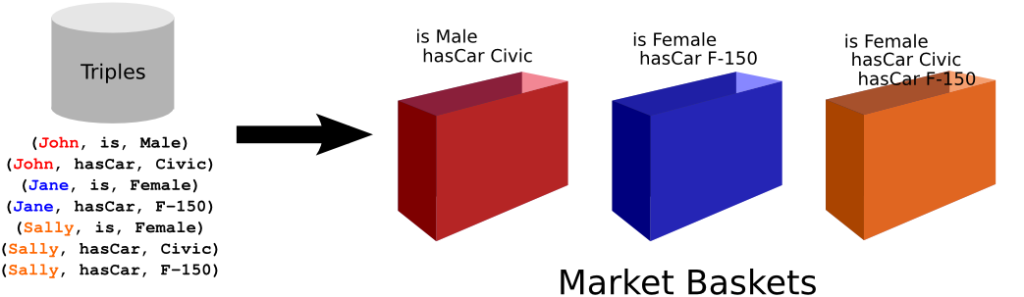

- Relationships between concepts: one important capability we wanted to provide is learning relationships between different concepts; specifically, which concepts tend to occur together frequently? To answer this question, we used tools from another area of data analysis called market basket analysis, specifically the problem of frequent itemset mining. A question retail stores have asked for years is: given a collection of transactions, each containing a set of items, can we discover which sets of items are frequently purchased together? Many tools have been developed to address this problem and, although it is by no means solved, a large body of research shows how to perform this kind of analysis at large scale for many (not too diabolical) data sets. Note that if we treat each entity as a transaction and the concepts that entity belongs to as the items in that transaction, then finding concepts that frequently co-occur is exactly the problem of finding items that co-occur in many different transactions.
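The inference augmentation can be sketched as a nearest-neighbor query around the translated vector s + p. This assumes a translation-style embedding (as the Obama + knows example suggests) stored in plain dicts of vectors; `infer_objects`, the toy two-dimensional vectors, and reading a smaller Euclidean distance as higher confidence are all hypothetical choices for illustration.

```python
import numpy as np

def infer_objects(entity_vecs, relation_vecs, subject, predicate, k=5):
    """Rank candidate objects o for <subject predicate ?> by distance to s + p.

    Assumes a translation-style embedding where s + p should land near o.
    Smaller distance is read as higher confidence.
    """
    names = [n for n in entity_vecs if n != subject]  # skip the subject itself
    matrix = np.stack([np.asarray(entity_vecs[n], dtype=float) for n in names])
    query = (np.asarray(entity_vecs[subject], dtype=float)
             + np.asarray(relation_vecs[predicate], dtype=float))
    dists = np.linalg.norm(matrix - query, axis=1)
    order = np.argsort(dists)[:k]
    return [(names[i], float(dists[i])) for i in order]


# Toy 2-D embeddings, made up for illustration.
entity_vecs = {
    "Obama": [0.0, 0.0],
    "Biden": [1.0, 0.1],
    "Clinton": [0.9, -0.1],
    "Paris": [5.0, 5.0],
}
relation_vecs = {"knows": [1.0, 0.0]}
top = infer_objects(entity_vecs, relation_vecs, "Obama", "knows", k=2)
```

In a real embedding with many entities, one would swap the brute-force distance computation for an approximate nearest-neighbor index.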
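To illustrate the entity-as-transaction framing, here is a small Apriori-style frequent itemset miner. It is a hypothetical, unoptimized sketch (`frequent_itemsets`, `min_support`, `max_size`, and the concept labels in the example are our own inventions); at scale one would reach for an established algorithm such as FP-Growth rather than rescanning every transaction for each candidate.

```python
from collections import Counter
from itertools import combinations

def frequent_itemsets(transactions, min_support, max_size=3):
    """Apriori-style mining: return {frozenset(items): support_count}.

    A candidate of size k is only counted if all of its (k-1)-subsets
    were already found to be frequent (the Apriori pruning rule).
    """
    transactions = [frozenset(t) for t in transactions]
    counts = Counter(item for t in transactions for item in t)
    frequent = {frozenset([item]): c for item, c in counts.items() if c >= min_support}
    result = dict(frequent)
    size = 2
    while frequent and size <= max_size:
        # Only items appearing in a frequent (size-1)-set can appear in a frequent size-set.
        items = list({item for itemset in frequent for item in itemset})
        frequent = {}
        for combo in combinations(items, size):
            if not all(frozenset(sub) in result for sub in combinations(combo, size - 1)):
                continue
            support = sum(1 for t in transactions if t.issuperset(combo))
            if support >= min_support:
                frequent[frozenset(combo)] = support
        result.update(frequent)
        size += 1
    return result


# Each entity is a "transaction"; the concepts it belongs to are its "items".
entity_concepts = {
    "Michelle": {"hasGender Female", "livesIn USA", "marriedTo Obama"},
    "Hillary": {"hasGender Female", "livesIn USA"},
    "Angela": {"hasGender Female", "livesIn Germany"},
    "Joe": {"livesIn USA"},
}
itemsets = frequent_itemsets(entity_concepts.values(), min_support=2)
```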

Concept Extraction to Concept Definition:

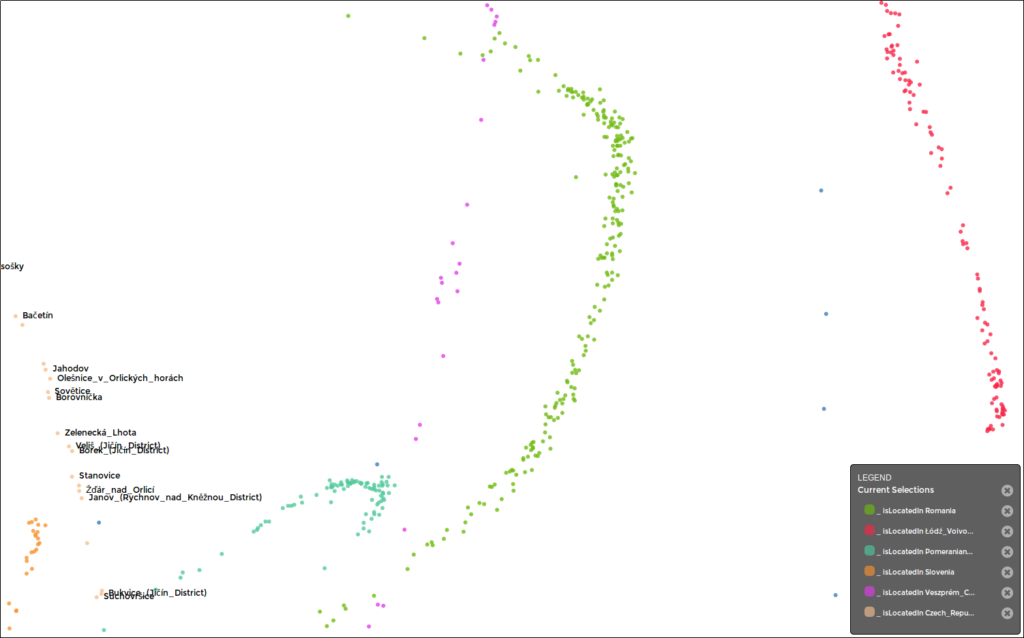

As we noted, it is also possible to perform concept extraction before concept definition, as long as we have some reasonable way of automatically generating semantically related collections of entities. Fortunately, one of the fundamental properties of the vector space embedding model from Nick’s post is that entities with similar vector representations tend to be semantically similar; this is why nearest-neighbor search in our vector space is a query with semantic value. Thus, clustering entities in vector space should produce collections of semantically related entities. We can do this hierarchically to produce multiple levels of entity clusters (concepts), which also live in the embedding space (the embedding of a cluster is the mean of all vectors in it).

After extracting these concepts, we still need to define them in a way a user can understand. To do this, we extracted features for each entity and compared the feature distribution in each cluster to the global distribution. For each cluster, every feature whose distribution differs significantly from that of the whole population is flagged as a feature that helps define the cluster. For example, one of the clusters we extracted from the YAGO dataset (Wikipedia data) listed the following cluster definition features: isLocatedIn Romania: 98% (vs. 1% globally) and isLocatedIn Neamt_County,_Romania: 88% (vs. 0% globally).
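The post does not say which clustering algorithm produced the hierarchy, so the following sketch swaps in one simple option, divisive (bisecting) 2-means, purely as an illustration. Each node in the resulting tree stores its member indices and its centroid, which serves as the cluster's embedding as described above. `build_hierarchy`, `two_means`, `depth`, and `min_size` are hypothetical names.

```python
import numpy as np

def two_means(X, iters=20, seed=0):
    """Split the rows of X into two groups with a few rounds of k-means (k=2)."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=2, replace=False)]  # fancy indexing copies
    labels = np.zeros(len(X), dtype=int)
    for _ in range(iters):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(2):
            if np.any(labels == j):
                centers[j] = X[labels == j].mean(axis=0)
    return labels

def _split(X, idx, depth, min_size):
    # Each node: member row indices, centroid (the cluster's embedding), children.
    node = {"members": idx, "centroid": X[idx].mean(axis=0), "children": []}
    if depth == 0 or len(idx) < 2 * min_size:
        return node
    labels = two_means(X[idx])
    parts = [idx[labels == j] for j in range(2)]
    if min(len(p) for p in parts) < min_size:
        return node  # split too lopsided; keep this node as a leaf
    node["children"] = [_split(X, p, depth - 1, min_size) for p in parts]
    return node

def build_hierarchy(vectors, depth=3, min_size=2):
    """Recursively bisect entity vectors into a tree of clusters (concepts)."""
    X = np.asarray(vectors, dtype=float)
    return _split(X, np.arange(len(X)), depth, min_size)


# Two well-separated toy blobs; one level of splitting should recover them.
points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
tree = build_hierarchy(points, depth=1)
```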
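For the feature comparison, the post does not name the significance test used. As an assumption, this sketch flags a feature when the fraction of cluster members carrying it departs from the global fraction by more than `z_threshold` standard errors (a one-sample proportion z-test, which is only a rough approximation for small clusters). `defining_features`, its parameters, and the toy population are hypothetical.

```python
import math
from collections import Counter

def defining_features(cluster, entity_features, z_threshold=3.0):
    """Flag features whose frequency in `cluster` differs from the global rate.

    entity_features maps each entity to the set of features it has.
    Returns (feature, cluster_rate, global_rate, z) sorted by |z|, largest first.
    """
    n_total = len(entity_features)
    n_cluster = len(cluster)
    global_counts = Counter(f for feats in entity_features.values() for f in feats)
    cluster_counts = Counter(f for e in cluster for f in entity_features[e])
    flagged = []
    for feat, g_count in global_counts.items():
        p_global = g_count / n_total
        if p_global >= 1.0:
            continue  # every entity has it, so it cannot distinguish any cluster
        p_cluster = cluster_counts[feat] / n_cluster
        std_err = math.sqrt(p_global * (1 - p_global) / n_cluster)
        z = (p_cluster - p_global) / std_err
        if abs(z) > z_threshold:
            flagged.append((feat, p_cluster, p_global, z))
    return sorted(flagged, key=lambda row: -abs(row[3]))


# Made-up population: 100 cities, half "large", the first 10 in Romania.
entity_features = {f"city{i}": {"type City"} for i in range(100)}
for i in range(0, 100, 2):
    entity_features[f"city{i}"].add("hasPopulation Large")
for i in range(10):
    entity_features[f"city{i}"].add("isLocatedIn Romania")
cluster = [f"city{i}" for i in range(10)]
result = defining_features(cluster, entity_features)
```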

Visualization:

Our final goal for this project was entity and concept visualization. To visualize entities, we take their vectors in embedding space and run a dimensionality reduction technique called t-SNE on them to project them into 2D. Treating these points as (lat, lng) pairs, we used GeoServer to serve them to the user in a pannable, zoomable interface, where clusters replace individual points as the user zooms out. Here, GA-CCRi was able to leverage our GeoMesa work to provide a backend that easily scales to hundreds of millions of points. Our first class of concepts, those that are defined and then extracted, are exposed as facets in the interface that enable filtering and styling of the data.
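A sketch of the projection step, assuming scikit-learn's `TSNE` for the reduction. Since points treated as coordinates need to fall within valid longitude/latitude ranges, the hypothetical helper `to_lon_lat` rescales the 2D output into that box using one uniform scale factor, so relative distances from t-SNE are not distorted. The `margin` parameter and the (lon, lat) column order are our assumptions, not details of the original system.

```python
import numpy as np

def project_to_2d(vectors, perplexity=30.0, seed=0):
    """Reduce embedding vectors to 2-D with scikit-learn's t-SNE.

    scikit-learn is an optional dependency, imported lazily so that
    to_lon_lat works without it. perplexity must be smaller than the
    number of vectors.
    """
    from sklearn.manifold import TSNE
    return TSNE(n_components=2, perplexity=perplexity,
                random_state=seed).fit_transform(np.asarray(vectors, dtype=float))

def to_lon_lat(points_2d, margin=0.9):
    """Center 2-D points and scale them uniformly into the lon/lat box.

    Returns an (n, 2) array of (lon, lat) pairs, i.e. x then y. A single
    scale factor is used for both axes so relative distances are preserved.
    """
    pts = np.asarray(points_2d, dtype=float)
    lo, hi = pts.min(axis=0), pts.max(axis=0)
    center = (lo + hi) / 2
    half_span = (hi - lo) / 2
    limits = np.array([180.0, 90.0]) * margin  # max |lon|, max |lat|
    ratios = np.full(2, np.inf)
    mask = half_span > 0
    ratios[mask] = limits[mask] / half_span[mask]
    scale = ratios.min()
    if not np.isfinite(scale):
        scale = 1.0  # all points identical: they all map to (0, 0)
    return (pts - center) * scale


# With scikit-learn installed: coords = to_lon_lat(project_to_2d(entity_vectors))
corners = to_lon_lat([[0, 0], [4, 0], [0, 2], [4, 2]], margin=1.0)
```

Scaling the two axes independently would fill the map more completely, but it would stretch the t-SNE layout, so the sketch trades some empty space for undistorted neighborhoods.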